The EU’s Artificial Intelligence Act

Context:

- This week, members of the European Parliament reached an early agreement on a new draft of the European Union’s ambitious Artificial Intelligence Act.

- Many AI tools are essentially black boxes, which means that even those who designed them cannot explain what happens inside them to produce a specific output.

- The proposed legislation aims to strike a balance, encouraging “AI adoption while mitigating or preventing harms associated with certain applications of the technology.”

Points to Ponder:

ChatGPT:

- ChatGPT is an artificial intelligence-powered chatbot that users can hold a conversation with and ask questions.

- ChatGPT was developed by OpenAI, an AI research organisation and company dedicated to the responsible and safe development of artificial intelligence technology.

- OpenAI was founded in 2015 by a group of entrepreneurs and researchers that included Elon Musk, Sam Altman, and Greg Brockman.

- It can respond instantly to complex questions, almost like a personal know-it-all tutor, and some have compared it to Google.

- Thanks to its dialogue format, ChatGPT can answer follow-up questions, admit its mistakes, challenge incorrect premises, and reject inappropriate requests.

- Compared with earlier chatbots, it is more engaging, produces more detailed responses, and can even write poems.

- You can, for example, ask it to write a computer program or a small software application.

- It can also perform creative tasks like composing a story.

- It can explain scientific topics and answer factual questions on a wide range of subjects.

EU’s Artificial Intelligence Act

- Members of the European Parliament have reached an early agreement on a new draft of the Artificial Intelligence Act.

- The legislation was first drafted two years ago to bring transparency, trust, and accountability to artificial intelligence and to create a framework for managing risks to the EU’s safety, health, fundamental rights, and democratic values.

- The draft Act seeks to address ethical issues as well as implementation challenges in a variety of sectors, including healthcare, education, finance, and energy.

- The Act aims to strike a balance between fostering AI adoption and minimising or preventing harms connected with specific applications of the technology.

- The Act defines AI broadly as “software developed with one or more of the techniques that can, for a given set of human-defined objectives, generate outputs such as content, predictions, recommendations, or decisions influencing the environments they interact with.”

- The techniques covered by this definition include machine learning and deep learning, as well as knowledge- and logic-based approaches and statistical methods.

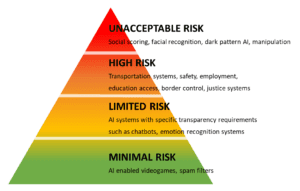

- The Act classifies AI systems according to the level of risk they pose to a person’s “health and safety or fundamental rights,” using four risk categories: unacceptable, high, limited, and low.

- With few exceptions, the Act prohibits AI systems in the unacceptable-risk category, such as real-time facial recognition and other biometric identification systems in public spaces, and government social scoring of citizens that leads to “unjustified and disproportionate detrimental treatment.”

- The Act focuses heavily on high-risk AI, setting out a range of pre- and post-market requirements for AI developers and users.

- Examples of high-risk systems include biometric identification and categorisation of natural persons, as well as AI applications in healthcare, education, employment (recruitment), law enforcement, the administration of justice, and systems that determine access to essential private and public services.

- The Act envisages an EU-wide database of high-risk AI systems and sets out criteria so that future or in-development technologies can be added to the high-risk category if they meet them.

- Under the Act, high-risk AI systems must undergo strict reviews, known as ‘conformity assessments’, before they can be released to the public. These include algorithmic impact assessments that analyse the data sets fed to AI tools, potential biases, how users interact with the system, and the overall design and monitoring of system outputs.

- AI systems posing limited risk, such as chatbots, may be used subject to light obligations, chiefly transparency requirements. Low-risk systems, such as spam filters or video games, face few or no additional restrictions.
