Crafting safe Generative AI systems

Context:

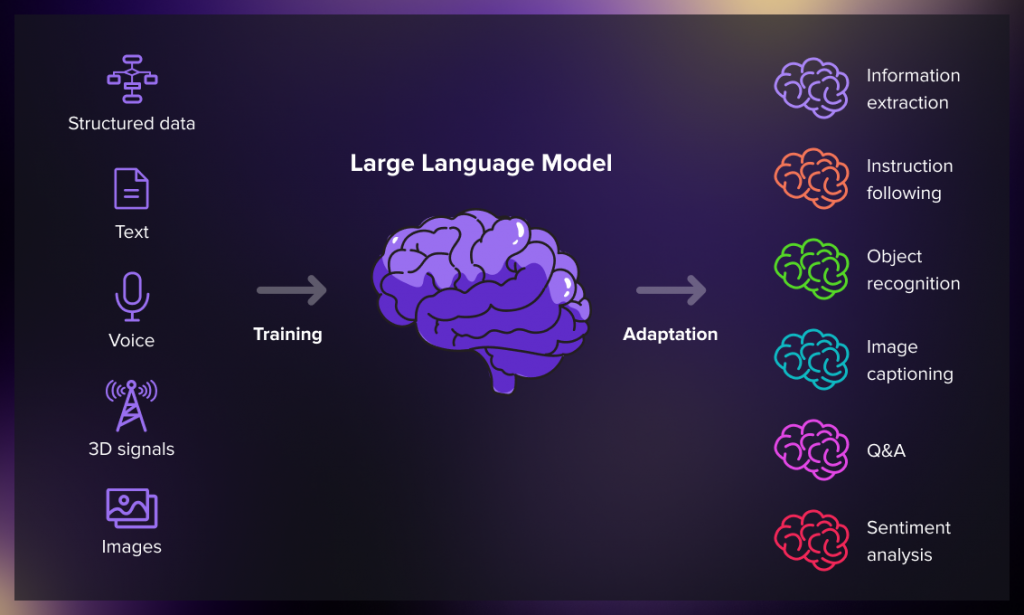

Forecasts predict that Large Language Models (LLMs) could add $2.6 trillion to $4.4 trillion to the global economy annually. The ongoing field test of the Jugalbandi Chatbot in rural India illustrates this potential. Powered by ChatGPT, the chatbot promises to act as a universal translator: it takes queries in local languages, retrieves answers from English-language resources, and delivers responses in the user’s native language.

Relevance:

GS-03 (Science and Technology)

Prelims:

- Generative Artificial Intelligence

- AI

- Generative Adversarial Network

- Variational Autoencoders (VAEs)

- National Strategy for Artificial Intelligence

Mains Question:

- Discuss the potential impact of Generative AI, highlighting its economic and societal implications. What role does the Jugalbandi Chatbot play in extending access to information and fostering economic inclusivity? (150 words)

Jugalbandi Chatbot:

- The Jugalbandi Chatbot is aimed at enhancing the accessibility of government services for residents in rural Indian villages.

- This indigenous artificial intelligence chatbot is designed to streamline interactions between individuals and government programs.

- At present, the chatbot understands 10 of the 22 officially recognized Indian languages and covers 171 government initiatives.

- The Jugalbandi Chatbot is being developed collaboratively by Microsoft Research, OpenNyAI, and AI4Bharat, a government-supported open-source language AI center housed within the Indian Institute of Technology (IIT) Madras.

- Through this collaboration, the chatbot intends to provide a platform through which all Indians can conveniently access information in their local languages via mobile phones.

Key Features of Jugalbandi:

- The distinguishing feature of Jugalbandi lies in its fusion of AI capabilities derived from AI4Bharat and Microsoft Azure OpenAI Service. This integration facilitates smooth and coherent conversations between users and the chatbot.

- By harnessing the potential of generative AI models, the chatbot is capable of synthesizing vast volumes of data to generate textual content and other forms of information.

Dimensions of the Article:

- Addressing Concerns

- Deconstructing the Safety Paradigm

- The Identity Assurance Framework

- Information Integrity

Addressing Concerns:

- The emergence of AI-powered tools has blurred the lines between human and artificial entities in the digital world. Whether through speech, text, or video, the capacity for deception poses considerable risks.

- Malicious actors, capitalizing on this capability, can perpetrate various forms of subterfuge, including misinformation, disinformation, security breaches, fraud, hate speech, and public shaming.

- In the United States, an AI-generated image portraying the Pentagon in flames triggered unease in equity markets. On social media platforms like Twitter and Instagram, counterfeit accounts spewing divisive political stances have gained traction, deepening the divisions of online politics.

- AI-cloned voices have proved convincing enough to bypass conventional bank security measures. A tragic incident in Belgium, purportedly linked to conversations with an LLM, underscores the potentially profound emotional impact of such systems.

- Even electoral processes haven’t been immune, as evidenced by AI-generated deepfakes tarnishing recent elections in Turkey. With elections approaching in the United States, India, the European Union, the United Kingdom, and Indonesia, the risk that ill-intentioned actors will exploit Generative AI for disinformation campaigns looms large.

Deconstructing the Safety Paradigm:

- The crux of the safety discourse is curtailing the misuse of AI tools to propagate misinformation and fabricated identities. Regrettably, the solutions proposed so far are not robust enough.

- One regulatory proposal would require digital assistants, colloquially known as ‘bots,’ to self-identify and would criminalize the dissemination of falsified media. While these measures could foster accountability, they remain inadequate in tackling the multifaceted challenge.

- While established companies may conform to the self-identification mandate and disseminate credible information, malevolent actors are likely to flout these regulations, leveraging the reputation of compliant entities to cloak their own devious intentions.

The Identity Assurance Framework:

- As the conversation extends beyond regulation, the imperative is to sculpt a landscape of enhanced internet safety and credibility.

- Drawing insights from recent research at the Harvard Kennedy School, we advocate for an identity assurance framework. This framework, premised on fostering trust among interacting entities, hinges on validating the authenticity of the participants, so that each can be confident in the identity the others declare.

- Crucially, this framework must be adaptable to the plethora of credential types that burgeon across the globe. A key tenet is to remain technology-agnostic, eschewing fixation on a singular technological modality, while steadfastly safeguarding privacy.

- Notably, digital wallets assume a pivotal role, affording users the luxury of selective disclosure and a bulwark against both governmental and corporate surveillance. This framework extends its canopy to encompass humans, AI bots, and commercial enterprises, forming a comprehensive ecosystem of identity assurance.
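
To make selective disclosure concrete, here is a minimal sketch of one common approach, salted hash commitments. It is an illustration under stated assumptions, not the framework proposed in the research cited above. All names (`issue_credential`, `present`, `verify`, `ISSUER_KEY`) are hypothetical, and an HMAC with a shared key stands in for the public-key signature a real issuer would use.

```python
import hashlib
import hmac
import json
import secrets

# Hypothetical issuer secret. A real system would use a public-key
# signature so that verifiers never need to hold the issuer's key.
ISSUER_KEY = secrets.token_bytes(32)


def _digest(name, value, salt):
    """Hash one claim together with its random salt."""
    payload = json.dumps([salt, name, value]).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def issue_credential(claims, key=ISSUER_KEY):
    """Issuer side: commit to every claim without exposing any value."""
    salts = {name: secrets.token_hex(16) for name in claims}
    digests = sorted(_digest(n, v, salts[n]) for n, v in claims.items())
    tag = hmac.new(key, json.dumps(digests).encode("utf-8"),
                   hashlib.sha256).hexdigest()
    credential = {"digests": digests, "tag": tag}  # safe to show verifiers
    wallet = {n: {"value": v, "salt": salts[n]} for n, v in claims.items()}
    return credential, wallet  # the wallet stays with the holder


def present(credential, wallet, reveal):
    """Holder side: disclose only the claims named in `reveal`."""
    disclosed = {n: wallet[n] for n in reveal}
    return {"credential": credential, "disclosed": disclosed}


def verify(presentation, key=ISSUER_KEY):
    """Verifier side: check the issuer tag, then each disclosed claim."""
    cred = presentation["credential"]
    expected = hmac.new(key, json.dumps(cred["digests"]).encode("utf-8"),
                        hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, cred["tag"]):
        return False
    known = set(cred["digests"])
    return all(_digest(n, d["value"], d["salt"]) in known
               for n, d in presentation["disclosed"].items())
```

A holder with claims such as a name, a village, and an `age_over_18` flag could call `present(credential, wallet, ["age_over_18"])` to prove only that flag. The verifier sees opaque digests for the remaining claims, and the random salts keep those digests from being guessed by trying likely values.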

Information Integrity:

- The bedrock of information integrity lies in affirming the authenticity of content and its alignment with its purported origin.

- This integrity derives strength from three core pillars. First, source validation establishes the provenance of information from recognized sources, publishers, or individuals. Second, content integrity ensures that the information remains untampered. Third, the realm of information validity, while contentious, can be fortified through mechanisms such as automated fact-checking and collaborative reviews by the community.
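
The first two pillars lend themselves to a short sketch. The example below is a simplified illustration, not a production scheme: the publisher registry, the shared secret, and the function names `publish` and `check` are assumptions made for this example, and an HMAC again stands in for a real digital signature. The third pillar, information validity, is deliberately left out because it cannot be reduced to a cryptographic check.

```python
import hashlib
import hmac

# Hypothetical registry of recognized publishers and their shared secrets.
TRUSTED_PUBLISHERS = {"example-news": b"demo-secret-not-for-production"}


def publish(publisher, content, key):
    """Publisher side: attach a content hash and a tag binding it to the source."""
    content_hash = hashlib.sha256(content.encode("utf-8")).hexdigest()
    message = f"{publisher}:{content_hash}".encode("utf-8")
    tag = hmac.new(key, message, hashlib.sha256).hexdigest()
    return {"publisher": publisher, "sha256": content_hash, "tag": tag}


def check(content, record):
    """Reader side: report source validation and content integrity separately."""
    key = TRUSTED_PUBLISHERS.get(record["publisher"])
    source_valid = False
    if key is not None:
        message = f"{record['publisher']}:{record['sha256']}".encode("utf-8")
        expected = hmac.new(key, message, hashlib.sha256).hexdigest()
        source_valid = hmac.compare_digest(expected, record["tag"])
    actual_hash = hashlib.sha256(content.encode("utf-8")).hexdigest()
    content_intact = actual_hash == record["sha256"]
    return {"source_valid": source_valid, "content_intact": content_intact}
```

Reporting the two results separately mirrors the distinction drawn above: a record can come from a recognized source while the text circulating alongside it has been altered, or an untouched text can carry a record from a publisher nobody recognizes.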

Way Forward:

- Achieving the twin goals of identity assurance and information integrity presents a formidable challenge. The need of the hour is to balance privacy against surveillance, civil liberties against security imperatives, and the merits of anonymity against the demands of accountability.

- Likewise, the domain of information integrity grapples with issues like censorship and the age-old question of who holds the authority to arbitrate truth. As we recalibrate these two pillars in the digital sphere, we must acknowledge that nations differ in their values and in their appetite for risk.

Conclusion:

- The responsibility for ensuring the secure and responsible deployment of Generative AI rests squarely with global leaders. The task calls for rethinking the criteria of safety assurance and building a foundation of trust. This goes beyond regulation and demands a deliberately engineered culture of online safety. As we navigate the complexities of an AI-driven future, it is incumbent upon us to chart a course that safeguards the integrity of identities and information, upholding the promise of progress while mitigating the perils that emerge along the way.